Encourage participants to give each other feedback, combine evaluations from multiple comparisons, and prioritize learning how to critique

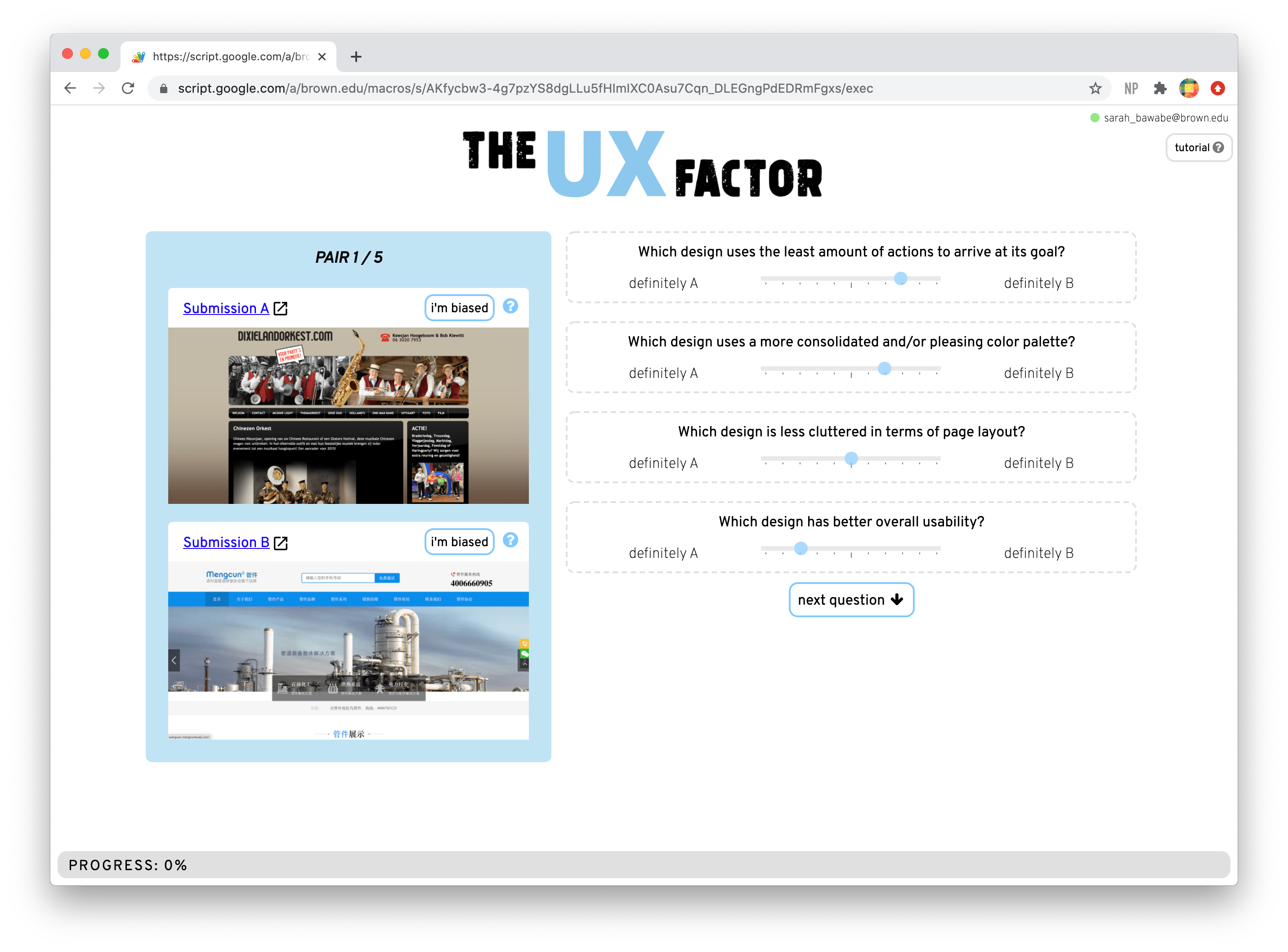

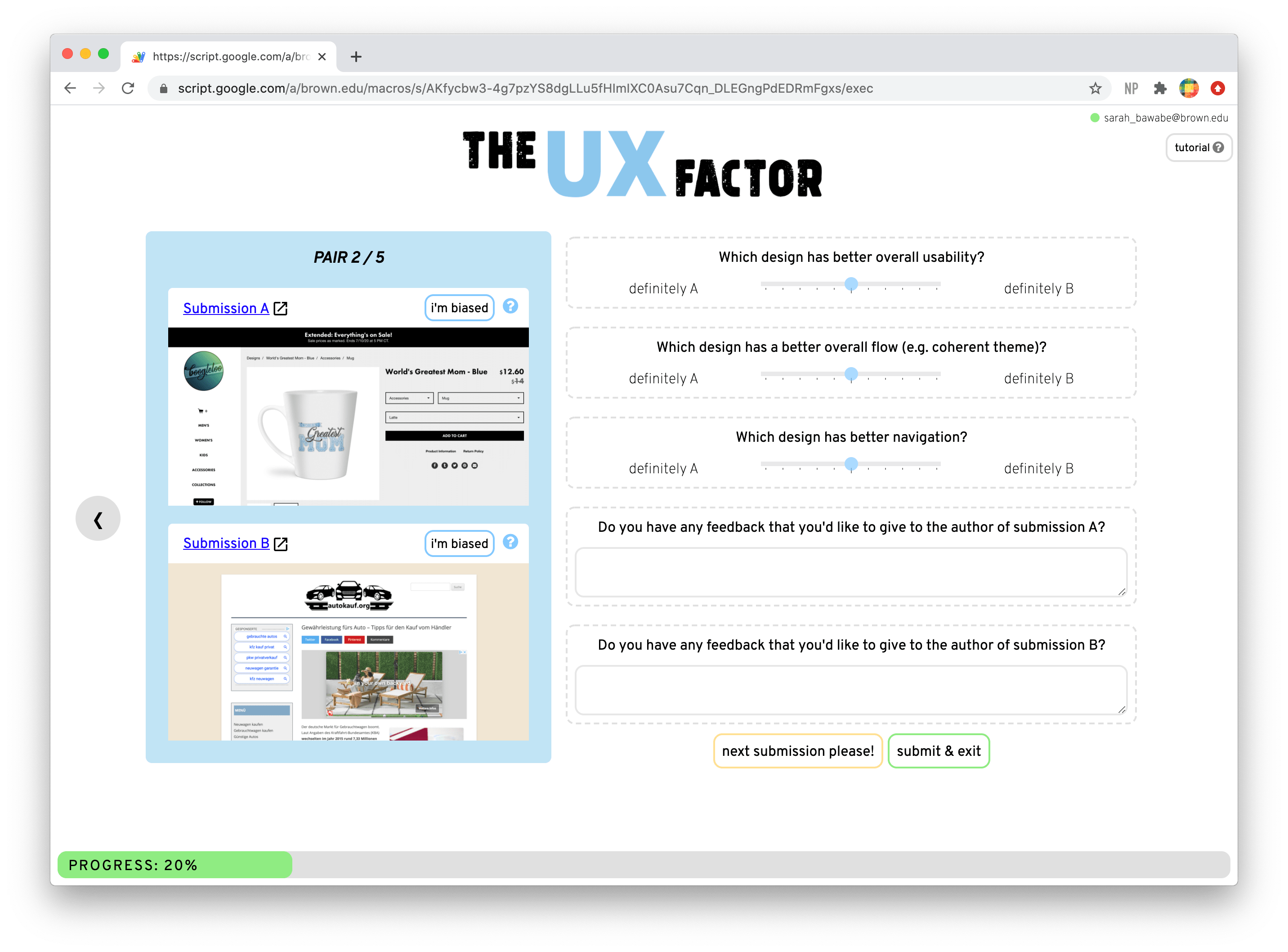

The UX Factor is an online peer evaluation platform that lets users anonymously compare others' submissions and give feedback. The goal is to give participants (who are often students) as much feedback as possible while keeping every submission anonymous. To analyze each submission quantitatively (which also lets you translate feedback into a grading report), the UX Factor asks pointed questions and has each participant decide which of two submissions better addresses the question. In this way, participants receive helpful feedback from their peers, and the UX Factor also suggests a quantitative grade that is, ideally, less biased than a grade given by a single evaluator.

The UX Factor has been used in large real-world settings. It has been used to assess the majority of assignment submissions in a Brown University course on User Interfaces and User Experience, with over a hundred students. It has also been translated into Japanese for the College Creative Jam run by Adobe in Japan, where it served as the main submission and feedback platform.

Read more about the UX Factor in our publication, "The UX Factor: Using Comparative Peer Review to Evaluate Designs through User Preferences," in the Conference on Computer-Supported Cooperative Work and Social Computing (CSCW 2021). This paper received both an Impact Recognition Award and an Honorable Mention Award, selected by two separate committees.

If you would like to use the UX Factor for your class or event, we would love to help you set it up or answer any questions for you. Please email us at hci-(at)-brown-(dot)-edu.

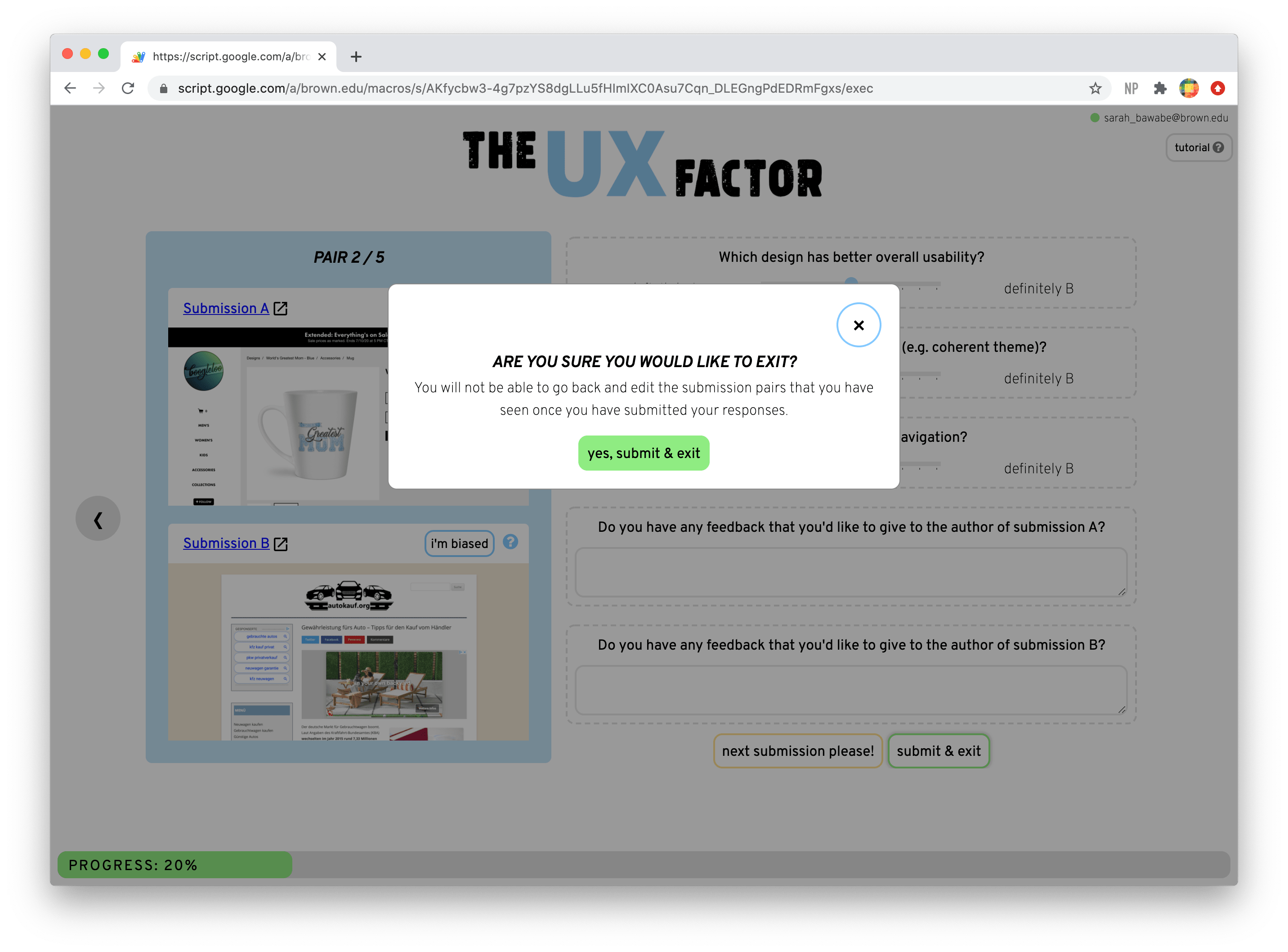

The goal of the UX Factor is to let participants in an activity take part in the evaluation process, which becomes a form of peer review. In each round, participants compare two randomly selected submissions from their fellow participants, choosing which one better addresses each criterion and optionally providing written feedback. After the evaluation period, the UX Factor aggregates each submission's evaluations and generates a report for each participant containing both the written feedback and how the submission compared against the others. The resulting assessment is intended to be more precise than an evaluation by a single reviewer. Participants also gain perspective on their own submissions by performing the comparisons themselves, since they see which parts of a submission they consider important for each criterion.
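The round-and-aggregate process described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `sample_pairs` and `aggregate`, the tuple layout of a comparison, and the use of a simple per-criterion win rate as the score are all hypothetical choices made for this sketch. The UX Factor itself runs on Google Apps Script, and its actual aggregation method may differ.

```python
import random
from collections import defaultdict
from itertools import combinations


def sample_pairs(submission_ids, own_id, rounds, rng):
    """Draw up to `rounds` distinct random pairs for one evaluator.

    The evaluator's own submission (`own_id`) is excluded, so they
    only ever compare their fellow participants' work.
    """
    others = [s for s in submission_ids if s != own_id]
    all_pairs = list(combinations(others, 2))
    return rng.sample(all_pairs, min(rounds, len(all_pairs)))


def aggregate(comparisons):
    """Turn pairwise choices into a win rate per criterion and submission.

    `comparisons` is an iterable of (criterion, winner_id, loser_id).
    Win rate is an assumed scoring rule, chosen here for simplicity.
    Returns {criterion: {submission_id: win_rate}}.
    """
    wins = defaultdict(lambda: defaultdict(int))
    totals = defaultdict(lambda: defaultdict(int))
    for criterion, winner, loser in comparisons:
        wins[criterion][winner] += 1
        totals[criterion][winner] += 1
        totals[criterion][loser] += 1
    return {
        criterion: {sub: wins[criterion][sub] / n for sub, n in per_sub.items()}
        for criterion, per_sub in totals.items()
    }


# Hypothetical data: three submissions judged on two made-up criteria.
comparisons = [
    ("usability", "A", "B"),
    ("usability", "A", "C"),
    ("usability", "C", "B"),
    ("aesthetics", "B", "A"),
]
scores = aggregate(comparisons)
pairs = sample_pairs(["A", "B", "C", "D"], own_id="A", rounds=2, rng=random.Random(0))
```

In this toy data, submission A wins both of its usability comparisons while C wins one of two, so a report built from `scores` would rank A above C above B on that criterion. Because every score pools choices from several evaluators, no single reviewer's preference determines the outcome.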

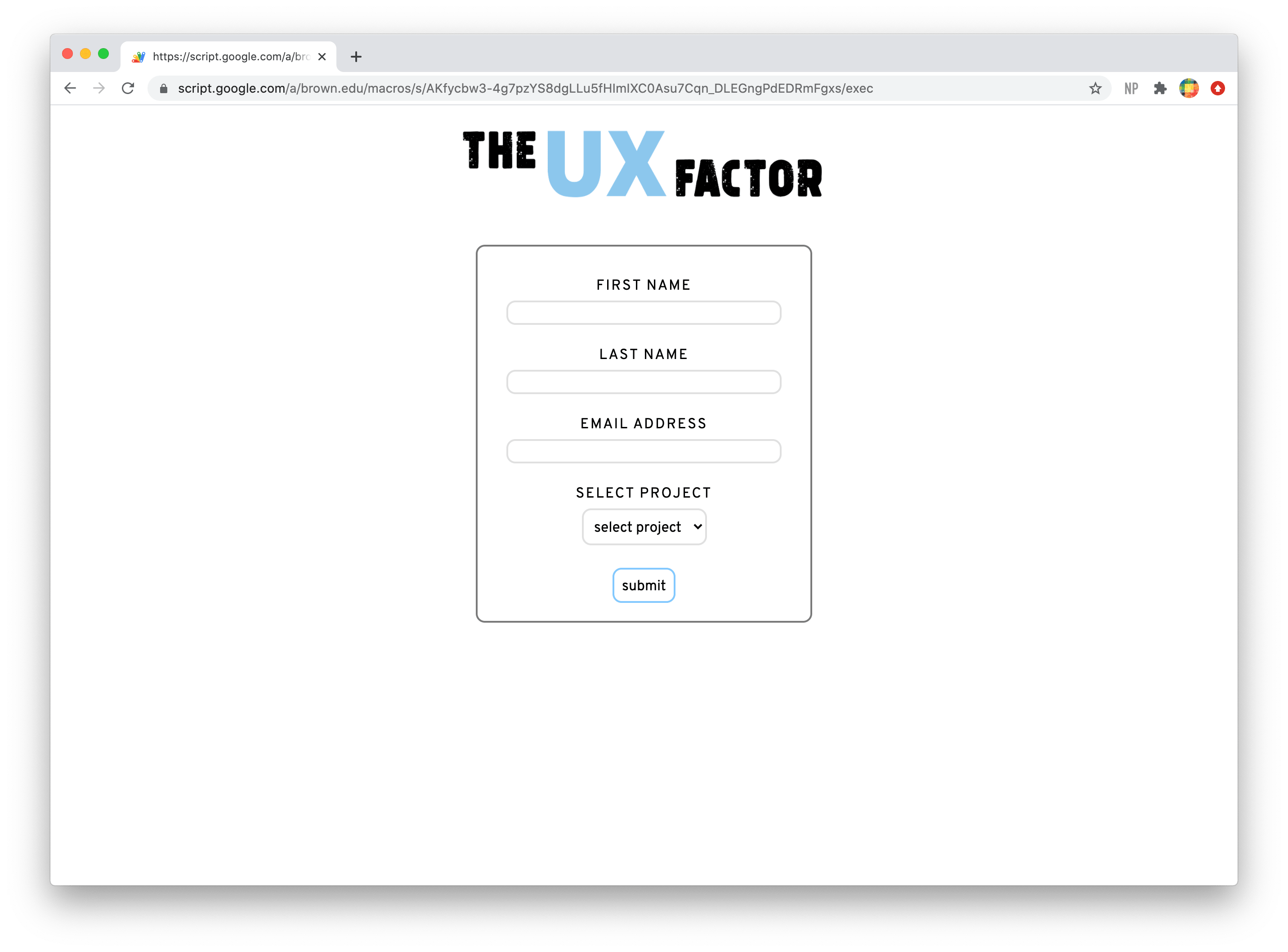

The UX Factor runs on top of Google Apps Script through various forms and spreadsheets. It is most naturally used to evaluate user interfaces, which can range from webpage designs and PDF hand-ins to sketches and more. More generally, it can be used to compare any type of submission, provided that each submission has a hyperlink.